Docker for Development

Docker revolutionized the software industry by changing how we deploy and manage software. However, many people associate Docker with CI/CD and server management tasks, overlooking its significant impact on software development. In reality, a substantial portion of Docker’s power lies in its utility for developing software, where it provides real value by streamlining and simplifying the development process.

This is particularly beneficial for junior developers and non-technical people who want to use software products without getting into the complexities of package management, version conflicts, and operating-system overhead. Containerization offers a straightforward yet potent solution.

Docker as a Package Manager

I remember trying to run a simple database server for a school project. I came across SQLite, which offers a simple solution. I really liked being able to represent my whole database state as a single, portable file, and I used it for a while. But when I wanted to take things to the next level, I found that SQLite lacks many of the capabilities of a modern DBMS.

At that time I was using Windows, so I chose MSSQL as a more industry-grade alternative, but with MSSQL I also had to install and run an additional wizard for some sort of GUI. I ended up downloading gigabytes of packages just to host a simple 5-10 table database for a CRUD application. I was configuring firewalls and tuning parameters, but the real problem was that the software offered far more functionality than I was asking for.

This is a common theme when you try to pick up new technologies in the software field. They usually come with a lot of configuration options and optimization parameters, because there are literally billion-dollar companies that rely on that particular software. So you often find yourself configuring your installation for a scenario you will almost certainly never need.

Enter Docker

Then I came across dockerized installations. These DBMS images offered me the portability of SQLite and the functionality of a production-level DBMS. That was the moment I got addicted to Docker. All I had to do was pull the image, bind some ports (and some configuration files if needed), and I was ready to go. I could see the status of my DB with docker ps, and I could easily spin it up and down.
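As a rough sketch of that workflow, the commands below wrap the pull, run, status, and teardown steps in small shell functions. The PostgreSQL image, the `dev-db` container name, and the `change-me` password are assumptions made purely for illustration; the same pattern should apply to whichever DBMS image you pick.

```shell
#!/bin/sh
# Sketch only: postgres:16, "dev-db" and "change-me" are illustrative choices,
# not something the story above prescribes.

# Pull the image and start it in the background, publishing the DB port.
db_up() {
  docker pull postgres:16
  docker run -d \
    --name dev-db \
    -e POSTGRES_PASSWORD=change-me \
    -p 5432:5432 \
    postgres:16
}

# Show whether the container is running.
db_status() {
  docker ps --filter "name=dev-db"
}

# Stop and remove the container.
db_down() {
  docker stop dev-db && docker rm dev-db
}
```

Calling `db_up`, then `db_status`, and finally `db_down` covers the whole lifecycle, and nothing is installed on the host besides Docker itself.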

Never install locally, unless you know exactly what you are doing

Another point is that I kept messing up local installations. I would somehow pick the wrong PPA package, and my package manager would yell at me about mistakes in a language I could not make sense of.

Nowadays almost every product offers a Docker installation; use it.

It is nearly impossible not to mess things up with local package managers, and they are OS-specific.

It’s a step towards Infrastructure as Code (IaC).

It is compact and portable.

It is easier to get help. Just copy and paste your compose file into ChatGPT.

Compose makes things easier.

Most of the time, all you need to do is:

- Pull an image

- Write a configuration file

- Bind it to the container

- Bind the exposed ports if needed

- Beware of Docker’s user permissions

and you are good to go.
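The steps above can be sketched as a single Compose file. This is a minimal example under stated assumptions: the `db` service name, the `postgres:16` image, the `change-me` password, and the `pgdata` directory are all hypothetical choices, and the script only writes the file so you can inspect it before starting anything.

```shell
#!/bin/sh
# Sketch only: the service name, image tag, password and paths are illustrative.

# A host directory to bind into the container. On Linux, files the container
# writes here are owned by the UID the container process runs as, which is the
# usual source of permission surprises.
mkdir -p pgdata

cat > docker-compose.yml <<'EOF'
services:
  db:
    image: postgres:16            # pulled automatically on first start
    environment:
      POSTGRES_PASSWORD: change-me
    ports:
      - "5432:5432"               # host:container
    volumes:
      - ./pgdata:/var/lib/postgresql/data   # host path:container path
EOF

# With Docker installed, the stack is then managed with:
#   docker compose up -d    # start in the background
#   docker compose ps       # check status
#   docker compose down     # stop and remove the containers
```

Binding a configuration file follows the same `host path:container path` pattern under `volumes:`; where the file has to land inside the container depends on the image.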

Let’s consider a scenario

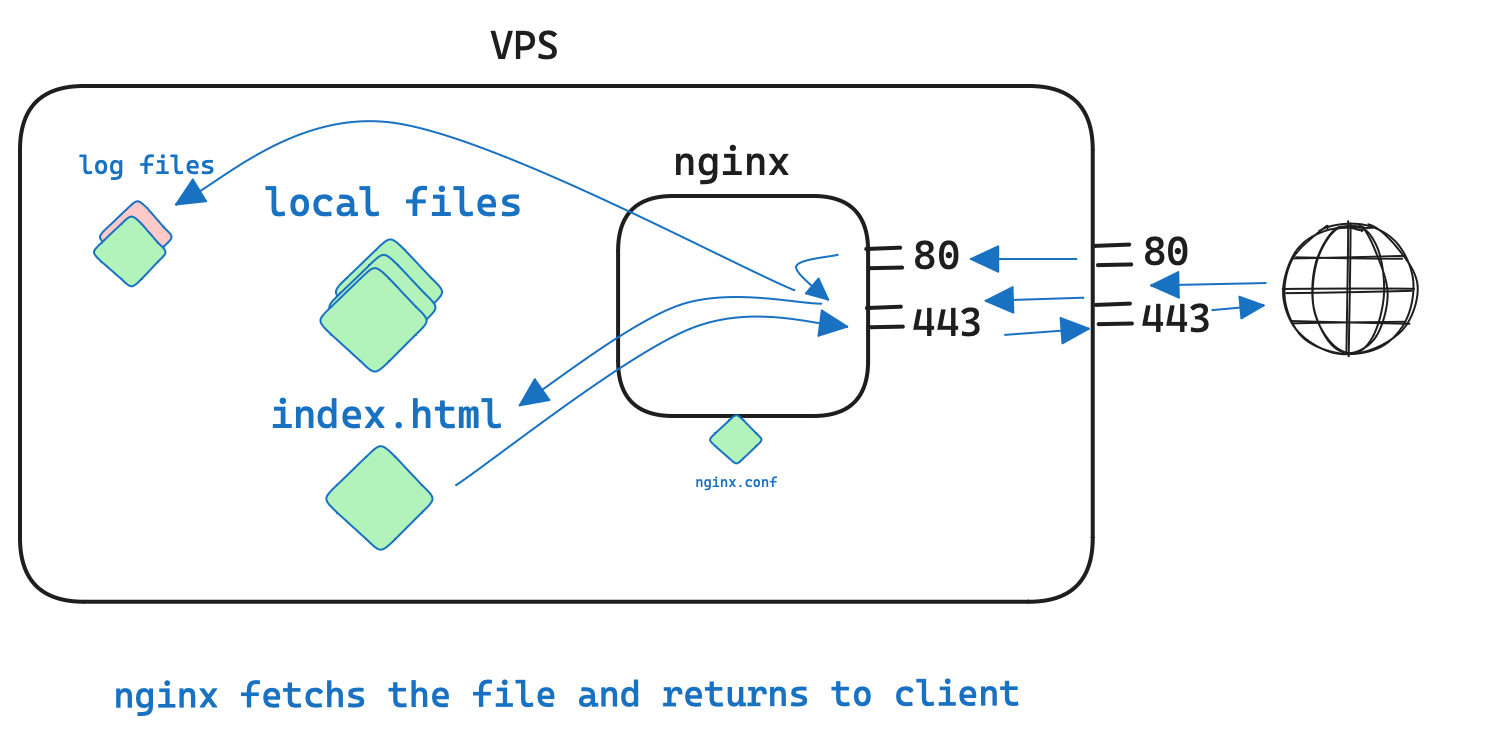

Let’s begin with something simple: say we want to host a personal website. We have designed an index.html page, and we want to host it on a machine we have access to rather than on a hosted solution like GitHub Pages or a no-code website builder.

For this we will need a VPS and nginx. Although this is not a post about reverse proxies or servers, I need to touch on them quickly, so bear with me.

We need a VPS to be able to accept requests from the external internet, for two reasons:

First, your home PC’s external IP is subject to change, and we need a static IP that is available 24/7 to give to our clients. Second, a home PC usually sits behind the router’s NAT, which does not let unsolicited external requests through without extra setup such as port forwarding, whereas a VPS typically comes with a publicly reachable IP. You can actually accept HTTP requests on your home PC from an external network with solutions like ngrok or frp, but that is for another post.

Once we have an externally available machine, we need to install a web server on it. Nginx is both a web server (a program that runs 24/7, accepts HTTP requests, and returns the files we want to serve to eligible clients) and a reverse proxy. A reverse proxy is something like a gatekeeper for our server. In an ideal scenario, any request coming from the outside world goes to the reverse proxy first, and the reverse proxy then decides where to direct that request inside our machine.

Our scenario is simple: we have an index.html page that we want to return to our clients when they connect to our IP. All we need to tell nginx is to look in the /x/y/z directory when it receives a request with the /x path.
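A minimal sketch of such a rule is shown below. It keeps the placeholder paths `/x` and `/x/y/z` from the text; the file name `site.conf` is hypothetical, and the block is only one possible way to express the mapping, using nginx's `alias` directive.

```shell
#!/bin/sh
# Sketch only: /x and /x/y/z are the placeholder paths from the text, and
# site.conf is a hypothetical file name.

cat > site.conf <<'EOF'
server {
    listen 80;              # plain HTTP

    # Requests whose path starts with /x/ are served from /x/y/z/
    location /x/ {
        alias /x/y/z/;
        index index.html;   # /x/ returns /x/y/z/index.html
    }
}
EOF

# On many Linux installs a file like this is included from /etc/nginx/conf.d/,
# after which you would validate and reload the configuration:
#   sudo nginx -t
#   sudo nginx -s reload
```

`alias` is used here instead of `root` because `root` would append the full request path, so `/x/index.html` would be looked up under `/x/y/z/x/`.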

Ports 80 and 443 are the default ports for HTTP and HTTPS respectively. HTTPS/SSL is not the topic of this blog post, but all you need to do is buy a domain name and use certbot. It will handle the transition from port 80 to port 443 for you.

The configuration above works fine, but let’s see why you may want to use a dockerized approach instead:

You need to install an nginx version that is compatible with your system.

You need to enable and disable firewall rules and try not to leave an unnecessary port open.

You need to monitor nginx regularly to make sure everything is working fine.

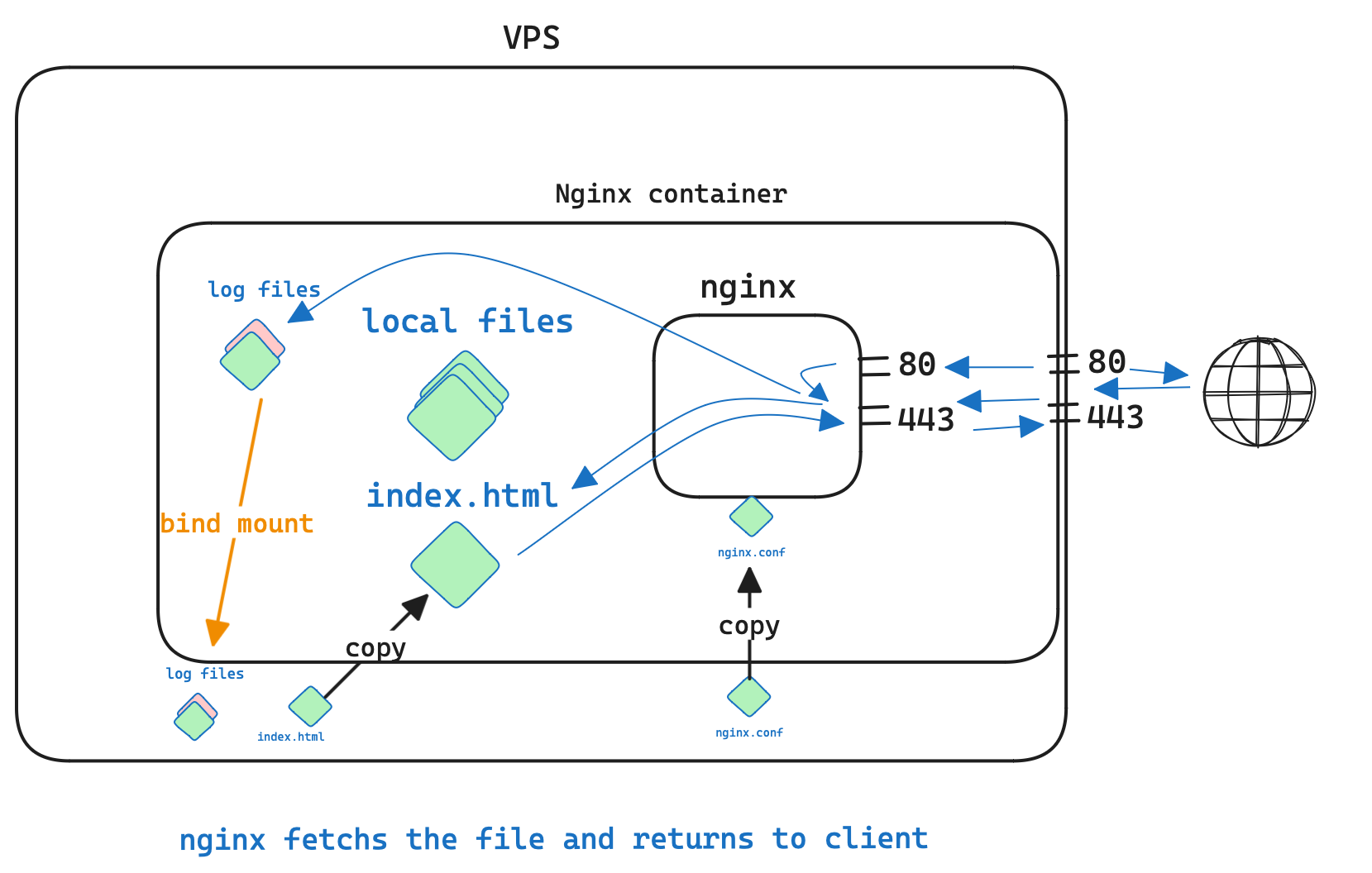

Let’s see how docker can improve this flow:

Improvements:

We did the same thing as before, but now all we deal with are the important files, like nginx.conf, index.html, or the logs.

We did not install any software on our system other than Docker itself.

Docker manages the iptables rules for published ports, so we do not need to update the host firewall configuration by hand.

We can easily roll back or stop the system, and monitoring it is as easy as typing docker ps.

We know that the system will work anywhere Docker is installed.
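The dockerized flow described above can be sketched as follows. Everything named here is an assumption for illustration: the `nginx:stable` tag, the `web` service name, the `logs` directory, and the choice to mount a small `site.conf` over the image's default site rather than replacing the whole nginx.conf. The script only writes the three files, so you can review them before starting the container.

```shell
#!/bin/sh
# Sketch only: file names, the nginx:stable tag and the directory layout are
# illustrative assumptions.

# Host directory that will receive nginx's access and error logs.
mkdir -p logs

# The page we want to serve.
cat > index.html <<'EOF'
<!doctype html>
<html><body><h1>Hello from my VPS</h1></body></html>
EOF

# A server block that replaces the image's default site.
cat > site.conf <<'EOF'
server {
    listen 80;
    location / {
        root /usr/share/nginx/html;
        index index.html;
    }
}
EOF

# One service: the image, the published port, and the files we care about.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx:stable
    ports:
      - "80:80"
    volumes:
      - ./index.html:/usr/share/nginx/html/index.html:ro
      - ./site.conf:/etc/nginx/conf.d/default.conf:ro
      - ./logs:/var/log/nginx
    restart: unless-stopped
EOF

# With Docker installed:
#   docker compose up -d    # start the web server
#   docker ps               # check that it is running
#   docker compose down     # stop and remove it
```

Copying these three files to another machine with Docker installed should be enough to reproduce the same setup there.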